Could AI convert all the PDF forms on GOV.UK into web forms?

02 May 2025

Last year I wrote about a tool I built that uses AI to generate GOV.UK-styled web versions of PDF forms.

This is useful because web forms are more accessible, mobile-friendly and smarter than document-based forms.

They reduce error rates and processing time and enable things like automation and identity verification.

In this update I'll consider what it would be like to use the tool to convert all the document-based forms on GOV.UK.

First, here's a short video of the tool in action:

NOTE: The tool now uses Google Gemini 2.5 Flash instead of Claude, which has made it about 4 times faster.

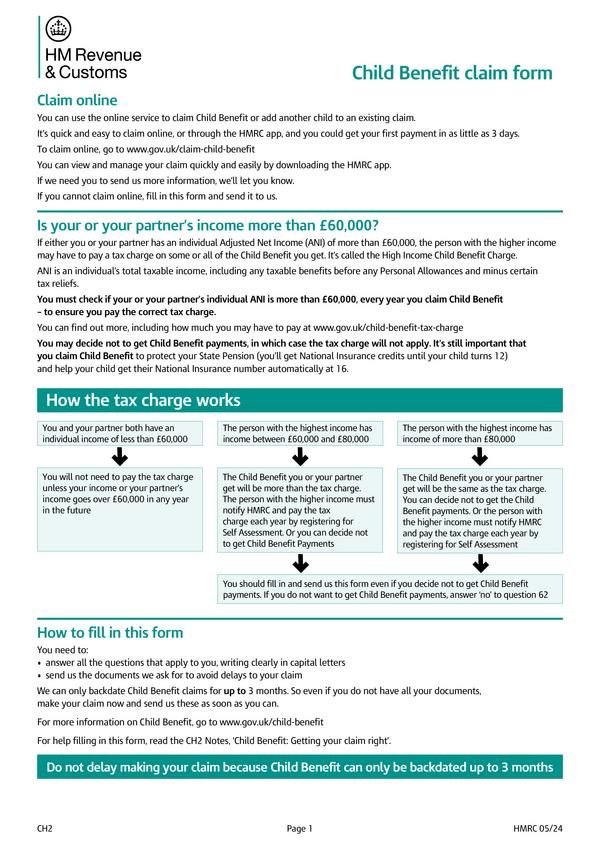

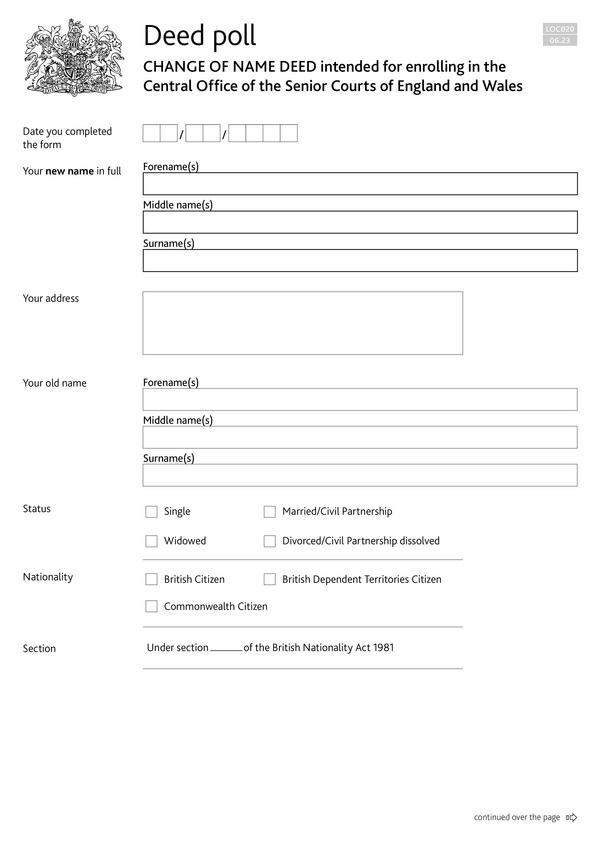

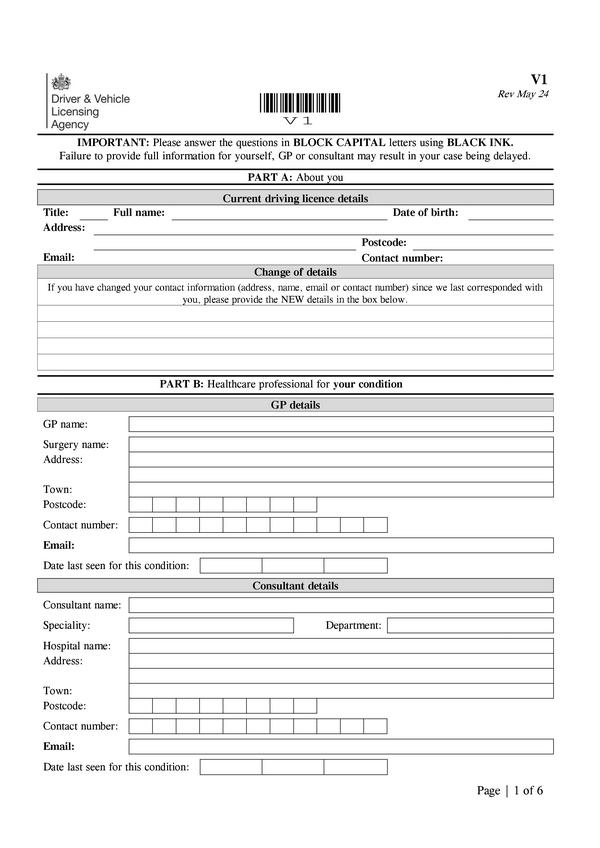

Below are 6 examples of the forms I've been testing and improving the tool with. They link to side-by-side comparisons of the original PDF and the generated form:

How does it work again?

The tool sends the form to a large language model (in this case Google Gemini), together with a JSON schema that describes what it should look out for.

The model returns JSON that represents its best guess at the form structure, and the tool uses that to construct a web form from standard GOV.UK components.
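As a rough illustration of the second half of that loop, here's a minimal Python sketch that turns the model's reply into question objects. It assumes the schema asks for a top-level `questions` array whose items have `title`, `answer_type` and optional `hint_text` and `options` keys; those key names and the `parse_form_json` helper are hypothetical, since the tool's actual schema isn't published here.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Question:
    """One question, as (hypothetically) described in the model's JSON reply."""
    title: str
    answer_type: str
    hint_text: str = ""
    options: list[str] = field(default_factory=list)


def parse_form_json(raw: str) -> list[Question]:
    """Turn the model's JSON reply into Question objects.

    Raises ValueError if the reply isn't valid JSON or a question is missing
    a required key, so a bad guess fails loudly instead of producing a
    half-built web form.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model did not return valid JSON: {exc}") from exc

    questions = []
    for i, item in enumerate(data.get("questions", [])):
        missing = {"title", "answer_type"} - set(item)
        if missing:
            raise ValueError(f"question {i} is missing {sorted(missing)}")
        questions.append(
            Question(
                title=item["title"],
                answer_type=item["answer_type"],
                hint_text=item.get("hint_text", ""),
                options=item.get("options", []),
            )
        )
    return questions


reply = '{"questions": [{"title": "What is your full name?", "answer_type": "text_area"}]}'
print(parse_form_json(reply))
```

Validating up front like this is one way to keep the page-building step simple: it only ever sees well-formed questions.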

Here's how I describe a form question in general:

...A question typically consists of a question title, some optional hint text and one or more form fields. Form fields typically look like boxes. Regions of the image can only belong to one question. Ignore any regions titled 'For official use only'.

And here's how I help it decide what kind of question it is:

...It's an information_page if there are no form fields on the page - convert the contents of the page to Markdown syntax. It's a number if the question is asking how much or many of something there is, or for a numerical quantity. It's a text_area if the question includes a large box for writing multiple lines or paragraphs of text. It's a date if it contains date fields like day, month, year. It's a multiple_choice if the question contains multiple square boxes and text like 'Tick the boxes' or 'Select all that apply'. It's a single_choice if the question contains multiple square boxes and text like 'Tick one box' or 'Select one option'. It's a yes_no_question if the question requires a yes/no response and contains two boxes. It's a time_period if the response required is in years, months, days, hours, minutes or seconds.
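One way to connect those labels to the page-building step is to pin the answer type to a JSON Schema `enum`, so the model can only pick one of the eight names in the prompt, and then look up a GOV.UK Design System component for each. The sketch below assumes that approach; the mapping itself (for example, number and time_period as text inputs) is my illustration, not the tool's actual mapping.

```python
from typing import Optional

# JSON Schema fragment limiting answer_type to the labels used in the prompt.
ANSWER_TYPE_SCHEMA = {
    "type": "string",
    "enum": [
        "information_page", "number", "text_area", "date",
        "multiple_choice", "single_choice", "yes_no_question", "time_period",
    ],
}

# Hypothetical mapping to GOV.UK Design System components.
COMPONENT_FOR_TYPE = {
    "information_page": None,        # content only, no form field
    "number": "Text input",
    "text_area": "Textarea",
    "date": "Date input",
    "multiple_choice": "Checkboxes",
    "single_choice": "Radios",
    "yes_no_question": "Radios",     # yes/no is a two-option radio group
    "time_period": "Text input",
}


def component_for(answer_type: str) -> Optional[str]:
    """Return the component for a label, rejecting anything outside the enum."""
    if answer_type not in ANSWER_TYPE_SCHEMA["enum"]:
        raise ValueError(f"unknown answer type: {answer_type!r}")
    return COMPONENT_FOR_TYPE[answer_type]


print(component_for("single_choice"), component_for("multiple_choice"))
```

Note that single_choice and multiple_choice differ only in the component they map to, which is why confusing "Tick one box" with "Tick the boxes" shows up as radios rendered as checkboxes.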

Processing all the forms on GOV.UK

I analysed the tool's performance on the 6 forms above (the details are in the appendix).

Based on that analysis, I think a tool like this could process 1,000 forms per minute. That would cost about £4.50 and use about 6 kWh of electricity.

So, what would it take to process all the forms on GOV.UK?

There are over 10,000 of them. Let's double that to be on the safe side, and to factor in all the testing of the tool.

Processing 20,000 forms would cost about £90, take about 20 minutes and use about 120 kWh of electricity.

If my estimates are close, that's equivalent to about 2 weeks' worth of an average UK household's electricity usage, so not insignificant.
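The time and energy arithmetic behind those figures can be checked in a few lines of Python. The 2,700 kWh a year for a typical UK household is my assumption (roughly Ofgem's typical domestic consumption value for electricity), not a number from the analysis in this post.

```python
FORMS = 20_000
FORMS_PER_MINUTE = 1_000   # estimate from this post
KWH_PER_1000_FORMS = 6     # estimate from this post

# Assumption: typical UK household electricity use of about 2,700 kWh a year.
HOUSEHOLD_KWH_PER_YEAR = 2_700

minutes = FORMS / FORMS_PER_MINUTE
kwh = FORMS / 1_000 * KWH_PER_1000_FORMS
household_days = kwh / (HOUSEHOLD_KWH_PER_YEAR / 365)

print(f"{minutes:.0f} minutes, {kwh:.0f} kWh, ~{household_days:.0f} days of household use")
# prints "20 minutes, 120 kWh, ~16 days of household use"
```

About 16 days is a little over 2 weeks, so the comparison holds under that household assumption.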

The good news is that this should be a one-time cost.

Unlike applications of AI that create an ongoing dependency with the technology, once a PDF form has been converted, it stays converted.

OK, but are the forms any good?

Well, yes and no. It has a good go at anything you throw at it, but it usually gets something wrong, like mistaking hint text for help text, or radios for checkboxes.

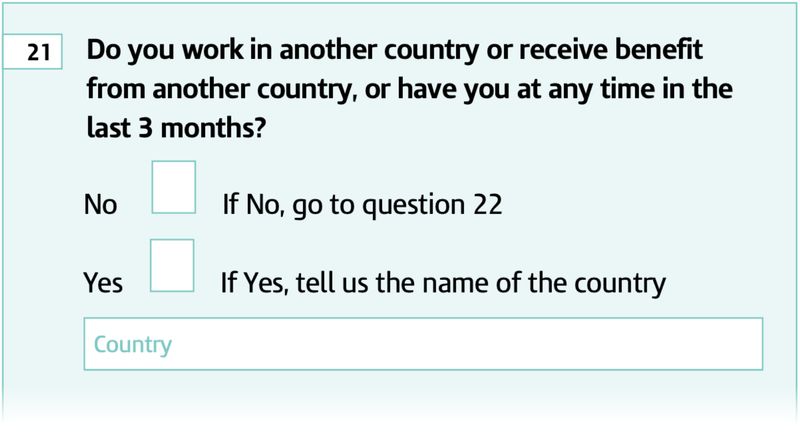

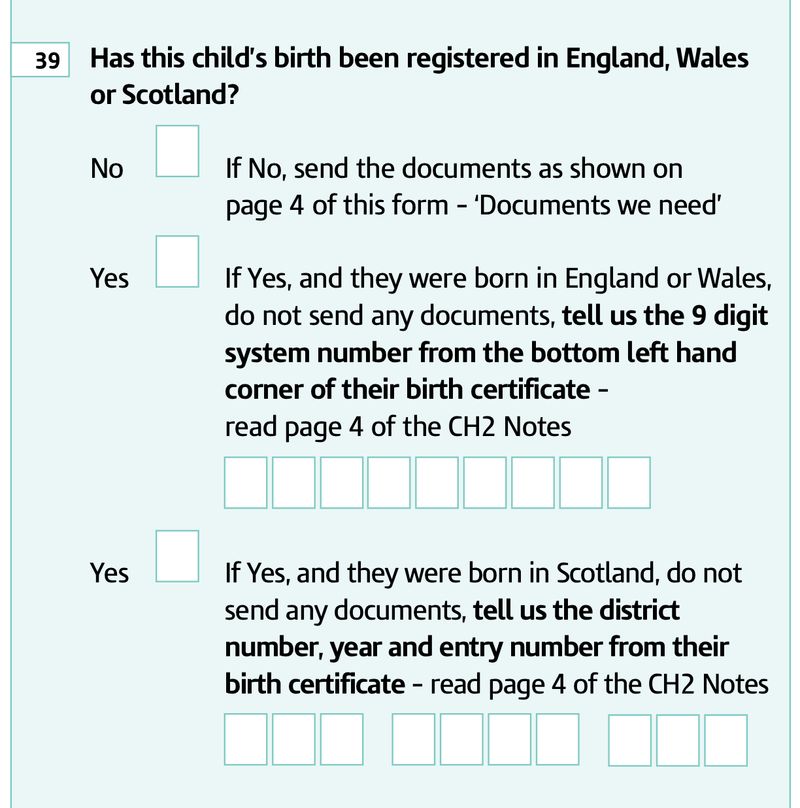

Here's a good example from this form of the kind of question it currently struggles with:

Whether or not the user answers the 2nd part of the question depends on how they answer the first part.

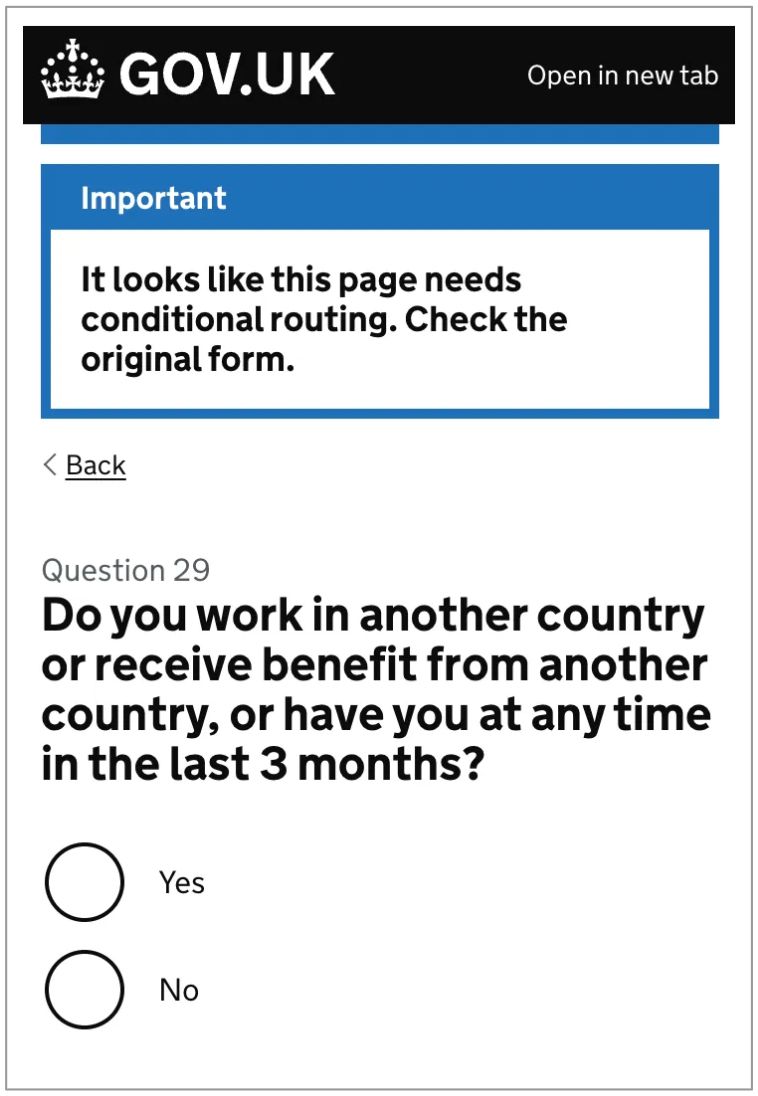

Right now the tool will often spot conditional questions, but all it can do is flag them to the user, like this:

Here's an even more complex example from later on in the same form:

The tool doesn't stand a chance here; it just dumps all the text behind a help link, like this:

I think that some of these limitations could be overcome by better prompting, a bigger library of components to draw on and by implementing step-by-step reasoning.

But I also think there are some more fundamental limitations that are nothing to do with AI and everything to do with service transformation.

Some PDF forms just don't translate to the web. Many should be completely redesigned, or scrapped entirely.

That requires stepping back and looking at the whole service: restructuring and rewriting forms, designing new processes, convincing stakeholders, and so on.

Right now, only people can do that.

Conclusion

To be really useful, a tool like this would first need to be integrated into a form builder like GOV.UK Forms, because every form it produces needs at least some tweaking.

Processing all the forms on GOV.UK would be very satisfying, but it wouldn't deliver any value until people finished designing each form, one at a time.

But what I hope is that a tool like this could lower the bar enough to kickstart that kind of systematic transformation effort in departments.

Right now, a department faced with thousands of PDF forms could be forgiven for throwing their hands up in despair and filing the problem under ‘Too Hard’.

But what if we could get the ball rolling for them by importing all those PDFs into a form builder, albeit in an imperfect and unfinished state?

What if the Digital or UCD teams in departments could take ownership of those forms, deciding if, when and how to finish and publish them?

I'm optimistic that we can find responsible ways to use generative AI to accelerate service transformation, and that this experiment shows one way it could happen.

What's next?

I want to see if I can address some of the examples of conditional questions, to show how they might be dealt with.

I'd also like to add the ability to describe a form in words, instead of only via a PDF. Then people could create new forms as well as convert existing ones.

Tim Paul

Appendix

Here's how I estimated the processing speed, cost and energy usage. I'm not a data analyst - please let me know if I could improve this!

Processing speed

The average PDF form in my test batch was 7.43 pages long, and each page took on average 4.75 seconds to process. However, pages can be processed in parallel, so it’s not a case of multiplying those 2 numbers together.
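To see why, here's a toy asyncio sketch, assuming each page goes to the model as its own independent request. The `process_page` function is a hypothetical stand-in that just sleeps instead of calling an LLM: a 7-page form finishes in roughly the time of its slowest page, not the sum of all of them.

```python
import asyncio
import time


async def process_page(page_number: int, latency_s: float) -> str:
    # Hypothetical stand-in for one model call; the sleep simulates latency.
    await asyncio.sleep(latency_s)
    return f"page {page_number} done"


async def process_form(page_count: int, latency_s: float) -> list[str]:
    # Start every page at once; gather waits for all and keeps page order.
    return await asyncio.gather(
        *(process_page(n, latency_s) for n in range(1, page_count + 1))
    )


start = time.perf_counter()
results = asyncio.run(process_form(page_count=7, latency_s=0.1))
elapsed = time.perf_counter() - start
print(f"{len(results)} pages in {elapsed:.2f}s (sequential would be ~{7 * 0.1:.1f}s)")
```

In a real run, the number of requests you can have in flight is bounded by the provider's rate limits rather than by the code.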

The real limits on processing time are the rate limits imposed by the LLM providers, with the limit on tokens per minute being the most constraining one.

The average PDF form in my test batch took 24,329 tokens to process. Based on Gemini's current rate limits, the limits on form processing speed are as follows:

- Tier 1: 164 forms per minute

- Tier 2: 411 forms per minute

- Tier 3: 1,233 forms per minute

(You graduate to Tier 2 once you’ve spent $250, and to Tier 3 once you’ve spent $1,000.)

So, assuming that you're at Tier 3, you ought to be able to generate 1,000 forms in a little under a minute.

At Tier 1 it would take about 6 minutes instead - a negligible difference in the grand scheme of things.
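Each tier figure is a tokens-per-minute budget divided by the average tokens per form. The limits below (4M, 10M and 30M tokens per minute) are my assumption, chosen because they reproduce the three figures above; Google's rate-limit documentation is the place to check current values.

```python
TOKENS_PER_FORM = 24_329  # average from the test batch

# Assumed tokens-per-minute limits per tier (check Google's docs for current values).
TPM_LIMITS = {"Tier 1": 4_000_000, "Tier 2": 10_000_000, "Tier 3": 30_000_000}


def forms_per_minute(tpm_limit: int, tokens_per_form: int = TOKENS_PER_FORM) -> int:
    # Count whole forms only: how many average forms fit in one minute's budget.
    return tpm_limit // tokens_per_form


for tier, tpm in TPM_LIMITS.items():
    rate = forms_per_minute(tpm)
    print(f"{tier}: {rate:,} forms/min, 1,000 forms in {1_000 / rate:.1f} min")
```

This treats tokens per minute as the only constraint, which matches the point above that it is the most limiting one.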

Processing cost

According to Google’s pricing info, it currently costs $0.15 to process 1 million input tokens and $0.60 to process 1 million output tokens.

The average PDF form in my test batch used 19,303 input tokens and 5,026 output tokens, resulting in a total cost of about $0.0059 per form, or about $5.91 per 1,000 forms.
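Here's the cost calculation as a small Python function, using only the prices and token averages quoted above. Prices are per million tokens, so each token count is multiplied by its price and divided by 1,000,000.

```python
INPUT_PRICE_PER_M = 0.15   # USD per 1M input tokens, as quoted above
OUTPUT_PRICE_PER_M = 0.60  # USD per 1M output tokens, as quoted above


def cost_per_form(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of processing one form with the given token counts."""
    return (
        input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    ) / 1_000_000


per_form = cost_per_form(19_303, 5_026)
print(f"${per_form:.4f} per form, ${per_form * 1_000:.2f} per 1,000 forms")
# prints "$0.0059 per form, $5.91 per 1,000 forms"
```

If Google's prices change, only the two constants need updating.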

Energy usage

My estimate is based on this analysis from Epoch AI, which suggests that an LLM uses around 2.5 watt-hours of energy for every 10,000 tokens it processes.
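Applied to the average form, that rate works out as follows. This is a sketch that assumes the 2.5 Wh per 10,000 tokens figure applies equally to every token, including image-based input.

```python
WH_PER_10K_TOKENS = 2.5   # Epoch AI-based figure quoted above
TOKENS_PER_FORM = 24_329  # average input + output tokens per form

wh_per_form = TOKENS_PER_FORM / 10_000 * WH_PER_10K_TOKENS
# 1,000 forms x Wh per form, divided by 1,000 to convert Wh to kWh.
kwh_per_1000_forms = wh_per_form * 1_000 / 1_000

print(f"{wh_per_form:.2f} Wh per form, {kwh_per_1000_forms:.1f} kWh per 1,000 forms")
# prints "6.08 Wh per form, 6.1 kWh per 1,000 forms"
```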

I do wonder, though, whether the figure for processing image-based input tokens might be higher, and would welcome any insights about this.