Using AI to generate web forms from PDFs

13 May 2024

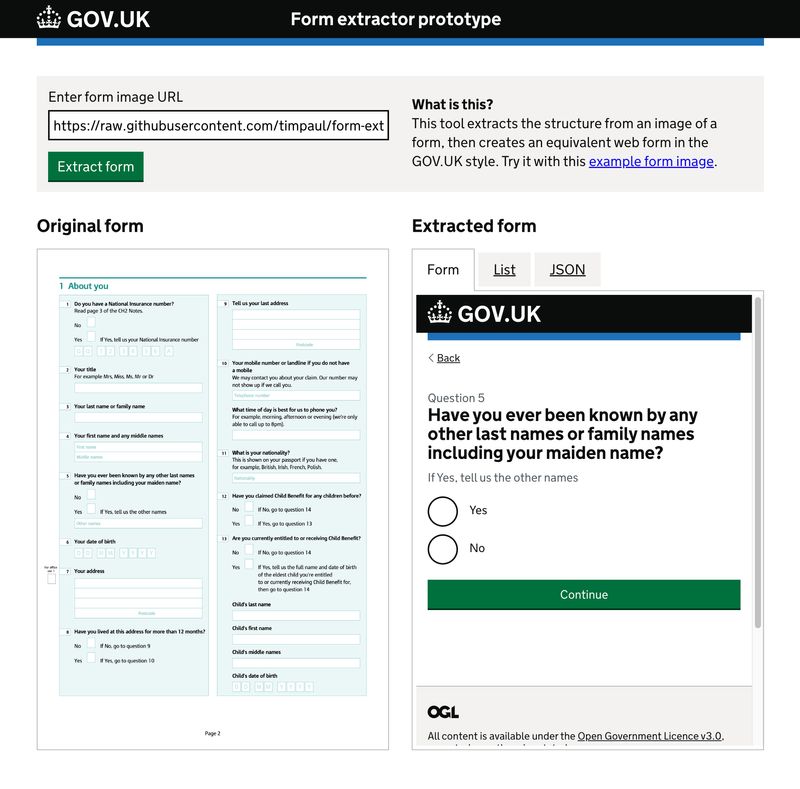

I made a little AI experiment recently - a tool that takes an image of a PDF form and generates an equivalent multi-page web version of it. The code is here on GitHub.

It worked better than I’d anticipated, so I thought I’d write things up here. First, here's a little demo video:

As you can see, it's currently styled like GOV.UK (I work at GDS), but it's very much a personal side project for now.

OK, here's how I made it...

Step 1: testing the concept

Last November, inspired by Kuba's AI prototype generator, I'd idly wondered if an LLM could extract the structure from a PDF form and then generate a web form from it.

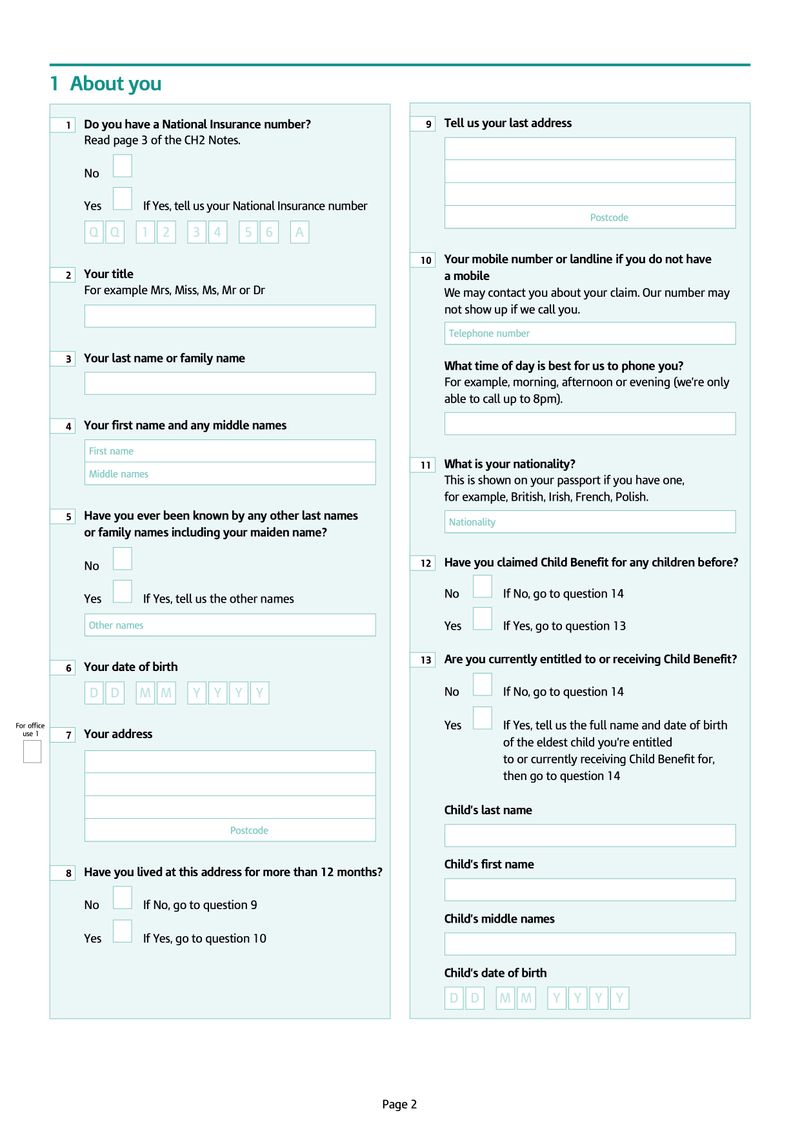

I was playing with the Claude AI chatbot recently and decided to have a go, so I uploaded this image:

Then I gave Claude the following prompt:

This is an image of a form. Please output all of the questions in the form as a JSON array of objects. The array has the key "pages" and each question has the keys "id", "question_text", "hint_text" and "answer_type". The value of "question_text" is the title of the question. The value of "hint_text" is any hint text for the question, or null if there is no hint text. The value of "answer_type" is one of the following: "number", "email", "name", "national_insurance_number", "phone_number", "organisation_name", "address", "date", "selection", "text".

And it worked! Here's what it returned:

    {
      "pages": [
        {
          "id": 1,
          "question_text": "Do you have a National Insurance number?",
          "hint_text": "Read out 3 if the QR Notes",
          "answer_type": "selection"
        },
        {
          "id": 2,
          "question_text": "Your title",
          "hint_text": "For example Mr, Mrs, Miss, Ms, Mx or Dr",
          "answer_type": "selection"
        },
        {
          "id": 3,
          "question_text": "Your last name or family name",
          "hint_text": null,
          "answer_type": "name"
        },
        {
          "id": 4,
          "question_text": "Your first name and any middle names",
          "hint_text": null,
          "answer_type": "name"
        },

... and so on

This gave me the confidence to proceed to the next step and try and build something.

Step 2: building the tool

The JSON returned by the chatbot was pretty accurate, but didn't always validate, making it too unreliable to build on top of.
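To make "didn't always validate" concrete, a check along these lines would catch the typical failures: a reply that isn't parseable JSON, a missing key, or an answer type outside the list in the prompt. This is a minimal Python sketch, not code from the project. The function name `check_form_json` is made up for illustration, and the keys and answer types are simply those named in the prompt above.

```python
import json

# Answer types and keys as listed in the prompt above.
ANSWER_TYPES = {
    "number", "email", "name", "national_insurance_number", "phone_number",
    "organisation_name", "address", "date", "selection", "text",
}
REQUIRED_KEYS = {"id", "question_text", "hint_text", "answer_type"}


def check_form_json(raw: str) -> list[str]:
    """Return a list of problems found in the model's reply (empty if none)."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as err:
        return [f"not valid JSON: {err.msg}"]

    if not isinstance(data, dict) or not isinstance(data.get("pages"), list):
        return ['top level must be an object with a "pages" array']

    problems = []
    for index, question in enumerate(data["pages"]):
        if not isinstance(question, dict):
            problems.append(f"pages[{index}] is not an object")
            continue
        missing = REQUIRED_KEYS - question.keys()
        if missing:
            problems.append(f"pages[{index}] is missing {sorted(missing)}")
        if question.get("answer_type") not in ANSWER_TYPES:
            problems.append(f"pages[{index}] has an unknown answer_type")
    return problems
```

A reply that was cut off part way through, which is one way chatbot output can fail, would come back with a single "not valid JSON" problem rather than raising an exception.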

However, I knew that the Claude API has a 'Tools' feature that lets you send a schema along with your image and prompt. In other LLMs this is known as function calling.

The schema defines precisely the kind of JSON that you'd like Claude to return. You can also include descriptions of each element to help Claude apply it correctly.

Here's a snippet of the schema I created:

    "question_text": {
      "type": "string",
      "description": "The title of the question. Questions titles are often bigger or bolder than the surrounding text."
    },
    "hint_text": {
      "type": "string",
      "description": "Any hint text associated with the question. It often appears immediately below the question title, in a smaller or lighter font."
    }

So I switched to using the API and built a little web interface to send the schema, image and prompt.
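For readers who haven't used the Tools feature, here is roughly what a request carrying a schema, an image and a prompt can look like. It is a Python sketch under assumptions, not the project's code: the tool name `extract_form_questions`, the trimmed-down schema and the `build_request` helper are all hypothetical, and the model name is left as a parameter. The block only builds the request, so it runs without an API key.

```python
import base64

# Hypothetical tool definition with a cut-down schema, for illustration only.
EXTRACT_TOOL = {
    "name": "extract_form_questions",
    "description": "Record the questions found in an image of a form.",
    "input_schema": {
        "type": "object",
        "properties": {
            "pages": {
                "type": "array",
                "items": {
                    "type": "object",
                    "properties": {
                        "id": {"type": "integer"},
                        "question_text": {
                            "type": "string",
                            "description": "The title of the question.",
                        },
                        "hint_text": {
                            "type": "string",
                            "description": "Any hint text for the question.",
                        },
                        "answer_type": {
                            "type": "string",
                            "enum": ["number", "email", "name", "date",
                                     "address", "selection", "text"],
                        },
                    },
                    "required": ["id", "question_text", "answer_type"],
                },
            }
        },
        "required": ["pages"],
    },
}


def build_request(image_bytes: bytes, media_type: str, prompt: str, model: str) -> dict:
    """Build the arguments for a Messages API call that must use the tool."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "max_tokens": 4096,
        "tools": [EXTRACT_TOOL],
        # Forcing the tool means the reply arrives as schema-shaped input.
        "tool_choice": {"type": "tool", "name": EXTRACT_TOOL["name"]},
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image",
                 "source": {"type": "base64", "media_type": media_type, "data": encoded}},
                {"type": "text", "text": prompt},
            ],
        }],
    }
```

With the official `anthropic` Python package installed, a request like this could be sent with `anthropic.Anthropic().messages.create(**request)`, and the structured answer read from the `input` of the `tool_use` block in the response content.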

Again, it worked pretty well - the JSON was now valid 100% of the time, which meant I could use it to generate HTML forms.

Rather than try to replicate all the fields in the original form, the tool identifies the question type, then uses the relevant UI component from the GOV.UK Design System.

This is a feature not a bug. The idea is not to just faithfully copy the original form, but to standardise and improve it as well.
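The mapping from question type to component could be sketched like this for the simplest case, a single text input. It is an illustrative Python sketch rather than the tool's own templating: the `render_text_question` function and the attribute table are assumptions, while the `govuk-form-group`, `govuk-label`, `govuk-hint` and `govuk-input` classes come from the Design System's text input component.

```python
from html import escape

# Assumed mapping from answer_type to extra <input> attributes.
INPUT_ATTRS = {
    "email": 'type="email" autocomplete="email"',
    "phone_number": 'type="tel" autocomplete="tel"',
    "number": 'type="text" inputmode="numeric"',
}
DEFAULT_ATTRS = 'type="text"'


def render_text_question(question: dict) -> str:
    """Render one extracted question as a GOV.UK-style text input."""
    field_id = f"question-{question['id']}"
    attrs = INPUT_ATTRS.get(question["answer_type"], DEFAULT_ATTRS)
    hint_html = ""
    if question.get("hint_text"):
        # Link the hint to the input so screen readers announce it.
        attrs += f' aria-describedby="{field_id}-hint"'
        hint_html = (
            f'  <div id="{field_id}-hint" class="govuk-hint">'
            f'{escape(question["hint_text"])}</div>\n'
        )
    return (
        '<div class="govuk-form-group">\n'
        f'  <label class="govuk-label" for="{field_id}">'
        f'{escape(question["question_text"])}</label>\n'
        f"{hint_html}"
        f'  <input class="govuk-input" id="{field_id}" name="{field_id}" {attrs}>\n'
        "</div>"
    )
```

Types such as "selection", "date" and "address" would need their own components (radios or checkboxes, date input, several address lines), so a fuller version would dispatch to a different renderer per type instead of falling back to a plain text input.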

What it can and can't do

Right now, the tool can:

- extract forms from PDFs and images

- use either OpenAI or Anthropic models

- break a form down into questions

- recognise when an image isn't a form

- distinguish between radios and checkboxes

- distinguish between question, hint and field text

- recognise question types like 'name' and 'address'

- recognise when a question has conditional routing

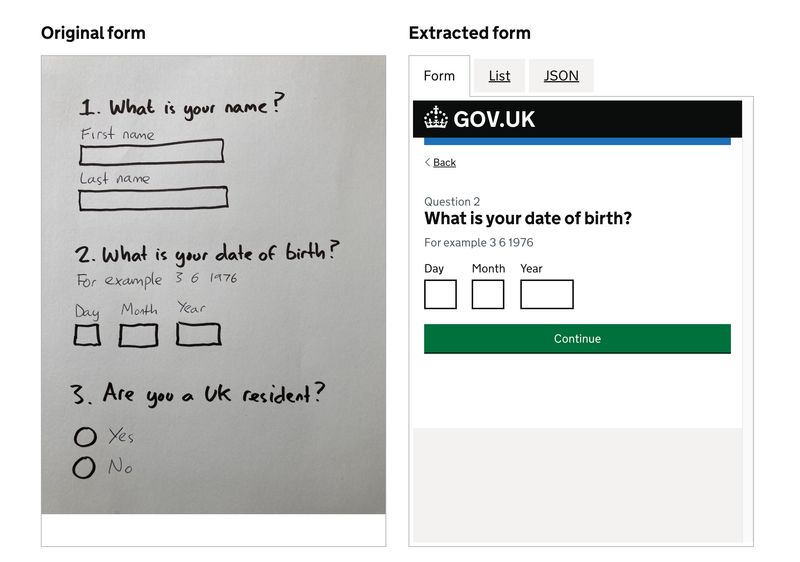

- process hand drawn forms

But it can't yet:

- do any of those things with 100% accuracy

- replicate complex or unusual question types

- implement conditional routing

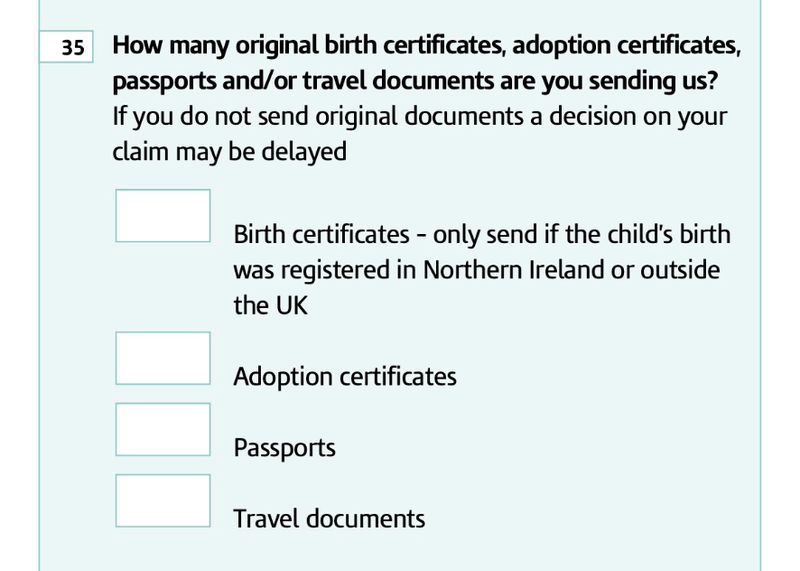

It also makes interesting and sometimes quite human mistakes. For example, mistaking the fields in the image below for checkboxes (they're number fields).

I've gradually tweaked the prompt and the schema descriptions and this has improved things somewhat, but it's unlikely to ever be 100% accurate.

Also, web forms are just different to PDF forms. There's no guaranteed one-to-one mapping of question types.

Some decisions about how to interpret a question for a web form context will depend on factors the LLM has no knowledge of, like business constraints.

Finally, genuine service transformation often requires a radical reimagining of old forms. Starting from scratch may be the better option in some cases.

For these reasons, I think tools like this might always work best as enhancements of more traditional products. In this case, a form builder like GOV.UK Forms.

With this approach a human can always go in afterwards and correct mistakes, remove cruft, switch things up etc.

Some observations

Building the tool, and then discussing it with people, has been a really valuable experience. Here are five thoughts about that.

1. The AI is the easy bit

OK, not exactly. The work happening inside Anthropic, OpenAI etc is anything but easy.

But their documentation and APIs are great, which makes our job of integrating them surprisingly simple. I’m no developer, but I got the AI part working in about an hour.

What took longer was the other stuff: identifying the problem, designing and building the UI, setting up the templating, routes and data architecture.

It reminded me that, in order to capitalise on the potential of AI technologies, we need to really invest in the other stuff too, especially data infrastructure.

It would be ironic, and a huge shame, if AI hype sucked all the investment out of those things.

Finally, form digitisation is only one of the things holding up the transformation of public services.

What really slows transformation is bureaucracy. It's getting permission to use a tool like this, and to make improvements to the underlying service.

2. LLMs can surprise you

The Claude API only accepts images, not PDFs. This annoyed me at first, until I realised you could send it any image - even one of a hand drawn sketch of a form.

Incredibly, it still worked! So suddenly there was this whole new, unanticipated use case - going from a sketch of a form to a web form in one step.

It really made me appreciate the combinatorial power of these discrete new capabilities we're developing.

3. People worry about scammers

When I shared this work a few weeks ago I got a lot of positive feedback, but also some concern.

A few people worried that I was making it easier for scammers to make fake government websites.

Unfortunately scammers can already make convincing fake websites without my help.

Adapting this tool for that purpose requires the same skills as making a fake site from scratch - there’s no real advantage.

I think the best way to tackle fake websites is to get them taken down quickly, and to teach users how to spot them (clue: check the domain).

4. People worry about spammers

People also worry that a tool like this would encourage organisations to digitise their old forms in bulk, without bothering to do any underlying service transformation.

I worry about this too. If you’re time poor, on a limited budget, or don’t have a user-centred mindset, it must be tempting to cut corners.

Personally, I’d rather we implement the time-saving tool and the right funding, governance and culture, than miss the opportunity to do things better and more efficiently.

We could also build guard rails and defaults into tools like this, to nudge people beyond mere digitisation and towards genuine transformation.

For example, we could integrate a question protocol into the tool, to encourage reduced data collection (thanks to Caroline Jarrett for prompting this idea).

Whether we decide to do all this is another thing.

5. Gen AI + standards FTW

Generative AI is spookily good at, well, generating stuff. It reminds me of the divergent phase of the design process.

But you also need the convergent phase - you need to winnow down all those possibilities into only the ones that meet your chosen constraints.

This is where good standards come in. For this project the GOV.UK Design System was the perfect formal representation we needed for our UI.

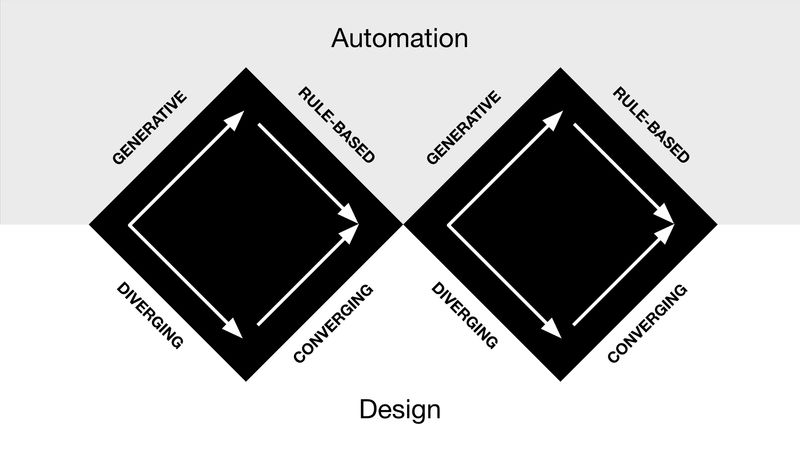

All this got me thinking about the double diamond, and whether there's an analogous approach for automation:

The design double diamond has alternating diverging and converging phases. Would an automation double diamond alternate between generative and rule-based systems?

Perhaps that's pushing the analogy too far - but I think that diverging/converging might be a useful strategy for applying generative AI in a safe and predictable way.
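One small way to picture the converging half is a rule-based step that snaps whatever the model generated onto a fixed set of supported components. The Python sketch below is hypothetical throughout: the `converge` function, the supported set and the choice of "text" as a fallback are assumptions made for illustration, not something the tool is known to do.

```python
# Assumed set of question types that have a matching standard component.
SUPPORTED_TYPES = {"number", "email", "name", "address", "date", "selection", "text"}
FALLBACK_TYPE = "text"


def converge(questions: list[dict]) -> list[dict]:
    """Keep generated questions, but only with question types we support."""
    constrained = []
    for question in questions:
        answer_type = question.get("answer_type")
        if answer_type not in SUPPORTED_TYPES:
            # The generative step proposed something outside the standard.
            answer_type = FALLBACK_TYPE
        constrained.append({**question, "answer_type": answer_type})
    return constrained
```

The generative step is free to propose anything, and the deterministic step guarantees that only components from the chosen standard ever reach the page.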

What's next?

For now this is just a personal experiment, but hopefully one that demonstrates the potential of applying LLMs to use cases like this.

I'll continue to tweak and extend its capabilities, and you're welcome to join me in that on GitHub.

And feel free to hit me up on Twitter, Bluesky or LinkedIn if you have any questions.